Hi,

We are currently evaluating Handsontable. One of our requirements is to be able to show a table with a large number of rows. We will not be populating the data in all the rows, only a small subset.

This appears to work quite well. In the JSFiddle below we create a table with 100,000 rows and populate data in only the first and last rows:

https://jsfiddle.net/chrisvisokio/ex619yL3/
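In outline, the data setup looks roughly like the sketch below. This is an illustration rather than a copy of the fiddle: the helper name `buildSparseData` and the cell values are hypothetical, and only the idea of setting `data.length` and filling the first and last rows is taken from our test.

```javascript
// Hypothetical helper: builds an array with `rowCount` slots where only
// the first and last rows actually hold data. All other slots stay empty.
function buildSparseData(rowCount) {
  const data = [];
  data.length = rowCount;                       // reserve the rows without filling them
  data[0] = ['first row', 'A', 'B'];            // illustrative values
  data[rowCount - 1] = ['last row', 'Y', 'Z'];  // illustrative values
  return data;
}

const data = buildSparseData(100000);

// Only two rows are populated, regardless of the array length.
const populatedRows = Object.keys(data).length;
console.log(data.length, populatedRows);
```

The array is then passed to Handsontable as the `data` option.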

If you scroll the table, you can see that the first and last rows are populated, and scrolling to the bottom of the table is smooth.

However, we need to be able to show more records, and this is where we encountered the problem. If you edit the fiddle and change the length to 500,000 rows (data.length = 500000), the table still renders, but scrolling is very slow. So it appears that performance decreases as the number of rows in the table increases. We would not expect this to occur when the additional rows contain no data.

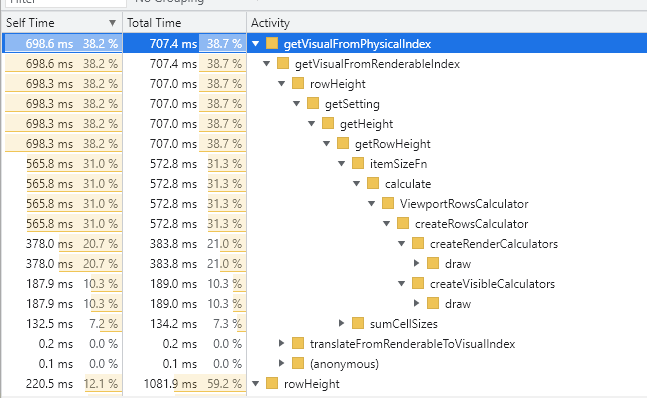

Profiling indicated a bottleneck in calculating row height (possibly in ViewportRowsCalculator). We tried passing in a fixed row height, but this did not resolve the problem.
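For clarity, a fixed row height can be set along the following lines. This is a sketch that assumes the `rowHeights` option is the relevant setting; the value 23 and the container id `example` are illustrative, and the Handsontable call is left commented out so the snippet runs on its own.

```javascript
// Sparse data as in the test: 500,000 slots, only first and last populated.
const rows = [];
rows.length = 500000;
rows[0] = ['first row'];               // illustrative value
rows[rows.length - 1] = ['last row'];  // illustrative value

// Options object with a single fixed height (in pixels) for every row.
const options = {
  data: rows,
  rowHeights: 23, // assumption: illustrative pixel value
};

// In the browser this would be passed to Handsontable, for example:
// const hot = new Handsontable(document.getElementById('example'), options);
console.log(options.rowHeights, options.data.length);
```

With this configuration, scrolling at 500,000 rows was still slow for us.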

Any help you could provide here would be appreciated. Ideally, we would like the table to perform well with 1M+ rows.

Regards,

Chris